From thoughts to words: How AI deciphers neural signals to help a man with ALS speak

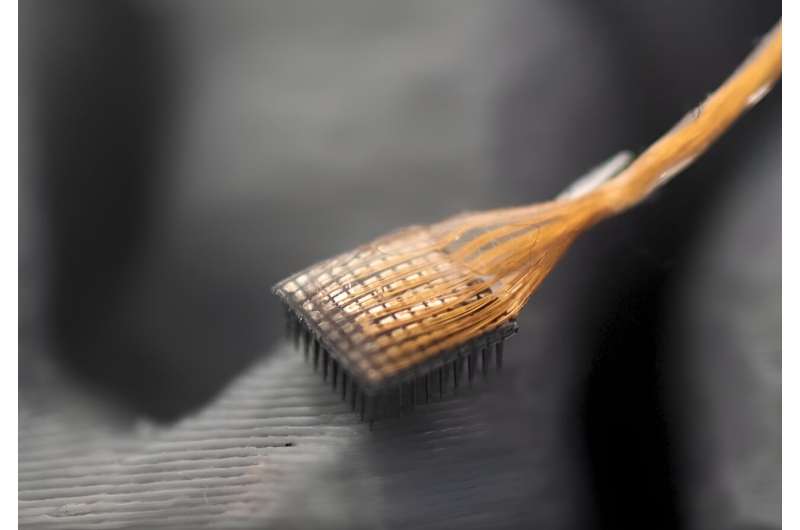

An array of 64 electrodes that embed into brain tissue records neural signals. Credit: UC Davis Health

Brain-computer interfaces are a groundbreaking technology that can help paralyzed people regain functions they’ve lost, like moving a hand. These devices record signals from the brain and decipher the user’s intended action, bypassing damaged or degraded nerves that would normally transmit those brain signals to control muscles.

Since 2006, demonstrations of brain-computer interfaces in humans have primarily focused on restoring arm and hand movements by enabling people to control computer cursors or robotic arms. Recently, researchers have begun developing speech brain-computer interfaces to restore communication for people who cannot speak.

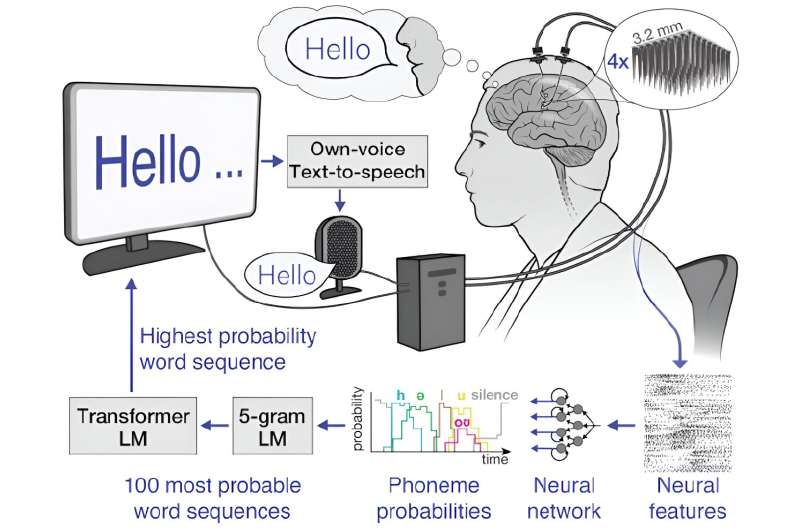

As the user attempts to talk, these brain-computer interfaces record the person’s unique brain signals associated with attempted muscle movements for speaking and then translate them into words. These words can then be displayed as text on a screen or spoken aloud using text-to-speech software.

I’m a researcher in the Neuroprosthetics Lab at the University of California, Davis, which is part of the BrainGate2 clinical trial.

My colleagues and I recently demonstrated a speech brain-computer interface that deciphers the attempted speech of a man with ALS, or amyotrophic lateral sclerosis, also known as Lou Gehrig’s disease. The interface converts neural signals into text with over 97% accuracy. Key to our system is a set of artificial intelligence language models: artificial neural networks that help interpret natural ones.

Recording brain signals

The first step in our speech brain-computer interface is recording brain signals. There are several sources of brain signals, some of which require surgery to record. Surgically implanted recording devices can capture high-quality brain signals because they are placed closer to neurons, resulting in stronger signals with less interference. These neural recording devices include grids of electrodes placed on the brain’s surface or electrodes implanted directly into brain tissue.

In our study, electrode arrays were surgically placed in the speech motor cortex of the participant, Casey Harrell. This region of the brain controls the muscles involved in speech. We recorded neural activity from 256 electrodes as Harrell attempted to speak.
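Intracortical brain-computer interfaces commonly reduce each electrode’s raw voltage to simple per-bin features before decoding, such as counts of threshold crossings: moments when the signal dips below a set voltage, a rough proxy for nearby neurons firing. The article does not say which features this system used, so the sketch below is an illustration under that assumption; the function name `bin_threshold_crossings`, the threshold and the bin size are all hypothetical. With 256 electrodes, each time bin would become a 256-number feature vector.

```python
from typing import List


def bin_threshold_crossings(
    voltages: List[List[float]], threshold: float, samples_per_bin: int
) -> List[List[int]]:
    """Count threshold crossings per electrode in fixed-length time bins.

    voltages[c][t] is the (filtered) voltage of electrode c at sample t.
    Returns counts[b][c]: crossings on electrode c during time bin b, so each
    row is one feature vector with one entry per electrode.
    """
    n_bins = len(voltages[0]) // samples_per_bin  # drop an incomplete final bin
    counts = [[0] * len(voltages) for _ in range(n_bins)]
    for c, trace in enumerate(voltages):
        for t in range(1, n_bins * samples_per_bin):
            # A crossing: the signal moves from above the threshold to at or below it.
            if trace[t - 1] > threshold >= trace[t]:
                counts[t // samples_per_bin][c] += 1
    return counts


# Two electrodes, six samples each, three samples per bin (hypothetical values).
print(bin_threshold_crossings([[0, -5, 0, -5, 0, 0], [0, 0, 0, 0, -5, 0]], -4.0, 3))
# [[1, 0], [1, 1]]
```

In real recordings the threshold is often set per electrode relative to that electrode’s noise level, rather than as one fixed value for all channels.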

Decoding brain signals

The next challenge is relating the complex brain signals to the words the user is trying to say.

One approach is to map neural activity patterns directly to spoken words. This method requires recording the brain signals for each word multiple times to identify the average relationship between neural activity and specific words.
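A minimal sketch of this word-template idea, assuming each attempt at a word has already been summarized as a fixed-length neural feature vector (a hypothetical simplification): average the repetitions of each word into a template, then label a new attempt with the nearest template. This nearest-centroid classifier is a stand-in chosen for clarity, not the decoder used in any particular study, and the words and feature values are made up.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def fit_word_templates(trials: List[Tuple[str, Sequence[float]]]) -> Dict[str, List[float]]:
    """Average the feature vectors from every recorded attempt at each word."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for word, features in trials:
        total = sums.setdefault(word, [0.0] * len(features))
        for i, x in enumerate(features):
            total[i] += x
        counts[word] += 1
    return {w: [s / counts[w] for s in total] for w, total in sums.items()}


def classify_word(templates: Dict[str, List[float]], features: Sequence[float]) -> str:
    """Label a new attempt with the word whose average pattern is nearest (Euclidean)."""
    return min(templates, key=lambda w: math.dist(templates[w], features))


trials = [
    ("hello", [1.0, 0.0]), ("hello", [0.8, 0.2]),
    ("water", [0.0, 1.0]), ("water", [0.2, 0.9]),
]
templates = fit_word_templates(trials)
print(classify_word(templates, [0.7, 0.3]))  # hello
```

Because `classify_word` can only return words that already have templates, every additional word needs its own fresh recordings, which is what makes this approach scale poorly to large vocabularies.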

While this strategy works well for small vocabularies, as demonstrated in a 2021 study with a 50-word vocabulary, it becomes impractical for larger ones. Imagine asking the brain-computer interface user to try to say every word in the dictionary multiple times: it could take months, and it still wouldn’t work for new words.
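To make “it could take months” concrete, here is back-of-the-envelope arithmetic with purely hypothetical inputs: a 10,000-word vocabulary, 20 attempts per word, five seconds per attempt including rest, and two hours of recording per day. None of these numbers come from the study.

```python
def recording_hours(n_words: int, repetitions: int, seconds_per_attempt: float) -> float:
    """Hours of recording needed to capture `repetitions` attempts of each word."""
    return n_words * repetitions * seconds_per_attempt / 3600


# Hypothetical inputs, chosen only for illustration.
hours = recording_hours(n_words=10_000, repetitions=20, seconds_per_attempt=5)
days = hours / 2  # assuming two hours of recording per day
print(f"{hours:.0f} hours, or about {days:.0f} days")  # 278 hours, or about 139 days
```

The cost grows linearly with vocabulary size, whereas a fixed inventory of phonemes stays the same size no matter how many words the system must handle.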

Instead, we use an alternative strategy: mapping brain signals to phonemes, the basic units of sound that make up words. In English, there are 39 phonemes, including ch, er, oo, pl and sh, that can be combined to form any word.

We can measure the neural activity associated with every phoneme multiple times just by asking the participant to read a few sentences aloud. By accurately mapping neural activity to phonemes, we can assemble them into any English word, even ones the system wasn’t explicitly trained with.
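The payoff of working with phonemes is that a new word needs only a pronunciation, not new neural recordings. A simplified sketch, assuming a tiny hypothetical pronunciation lexicon written in ARPAbet-style symbols (a common convention for English phonemes): dynamic programming splits a decoded phoneme sequence into dictionary words. This exact-match lookup is an illustration only; the phoneme-to-word step described in the next section relies on language models instead.

```python
from typing import Dict, List, Optional, Tuple

# Tiny hypothetical pronunciation lexicon using ARPAbet-style phoneme symbols.
LEXICON: Dict[str, Tuple[str, ...]] = {
    "i": ("AY",),
    "am": ("AE", "M"),
    "very": ("V", "EH", "R", "IY"),
    "good": ("G", "UH", "D"),
    "today": ("T", "AH", "D", "EY"),
}


def segment(phonemes: List[str], lexicon: Dict[str, Tuple[str, ...]]) -> Optional[List[str]]:
    """Split a phoneme sequence into lexicon words using dynamic programming.

    best[i] holds one word sequence that spells phonemes[:i] exactly, or None.
    """
    # Invert the lexicon so words can be looked up by pronunciation.
    by_pron = {pron: word for word, pron in lexicon.items()}
    max_len = max(len(p) for p in by_pron)
    best: List[Optional[List[str]]] = [None] * (len(phonemes) + 1)
    best[0] = []
    for end in range(1, len(phonemes) + 1):
        for start in range(max(0, end - max_len), end):
            if best[start] is None:
                continue
            word = by_pron.get(tuple(phonemes[start:end]))
            if word is not None:
                best[end] = best[start] + [word]
                break
    return best[len(phonemes)]


print(segment("AY AE M V EH R IY G UH D".split(), LEXICON))  # ['i', 'am', 'very', 'good']
```

Exact matching fails as soon as a single phoneme is wrong, and homophones share one pronunciation (the inverted dictionary keeps only one of them), which is one reason probabilistic language models are needed.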

To map brain signals to phonemes, we use advanced machine learning models. These models are particularly well-suited for this task because of their ability to find patterns in large amounts of complex data that would be impossible for humans to discern.
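The article doesn’t name its phoneme decoder. One widely used design in speech decoding, assumed here purely for illustration, is a recurrent neural network that outputs a probability for every phoneme, plus a special “blank” symbol, in each short time bin, trained with connectionist temporal classification (CTC). Training such a network is beyond a short example, but the final step of turning its per-bin probabilities into a phoneme sequence can be sketched with greedy CTC decoding. The blank symbol, phoneme labels and probabilities below are hypothetical.

```python
from typing import List, Optional, Sequence

BLANK = "_"  # hypothetical symbol for "no new phoneme in this time bin"


def greedy_ctc_decode(
    frame_probs: Sequence[Sequence[float]], symbols: Sequence[str]
) -> List[str]:
    """Greedy CTC decoding of per-time-bin phoneme probabilities.

    frame_probs[t][k] is the model's probability of symbols[k] in time bin t.
    Take the most probable symbol per bin, merge consecutive repeats, drop blanks.
    """
    decoded: List[str] = []
    previous: Optional[str] = None
    for probs in frame_probs:
        best = symbols[max(range(len(probs)), key=probs.__getitem__)]
        # A repeat counts as a new phoneme only if something separated it.
        if best != previous and best != BLANK:
            decoded.append(best)
        previous = best
    return decoded


symbols = [BLANK, "G", "UH", "D"]
frames = [
    [0.1, 0.8, 0.05, 0.05],   # G
    [0.2, 0.7, 0.05, 0.05],   # G again (merged with the previous bin)
    [0.9, 0.05, 0.03, 0.02],  # blank
    [0.1, 0.1, 0.7, 0.1],     # UH
    [0.1, 0.1, 0.1, 0.7],     # D
]
print(greedy_ctc_decode(frames, symbols))  # ['G', 'UH', 'D']
```

Greedy decoding commits to a single phoneme per bin; keeping several alternatives instead gives later language-model stages a chance to correct mistakes.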

Think of these models as super-smart listeners that can pick out important information from noisy brain signals, much like you might focus on a conversation in a crowded room. Using these models, we were able to decipher phoneme sequences during attempted speech with over 90% accuracy.
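In speech recognition, accuracy figures like this are conventionally derived from the edit distance between the decoded and true sequences: the error rate is the minimum number of substitutions, insertions and deletions divided by the length of the true sequence, and accuracy is one minus that. The article does not spell out its exact scoring, so treat this sketch as the standard convention rather than the study’s code.

```python
from typing import Hashable, Sequence


def edit_distance(ref: Sequence[Hashable], hyp: Sequence[Hashable]) -> int:
    """Levenshtein distance: fewest substitutions, insertions and deletions
    separating a decoded sequence (hyp) from the true one (ref)."""
    prev = list(range(len(hyp) + 1))  # distances from an empty ref prefix
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion: ref symbol missing from hyp
                curr[j - 1] + 1,         # insertion: extra symbol in hyp
                prev[j - 1] + (r != h),  # substitution, free when symbols match
            )
        prev = curr
    return prev[-1]


def accuracy(ref: Sequence[Hashable], hyp: Sequence[Hashable]) -> float:
    """1 minus the error rate, where error rate = edit distance / len(ref)."""
    return 1.0 - edit_distance(ref, hyp) / len(ref)


print(edit_distance("G UH D".split(), "G AH D".split()))  # 1
```

The same functions work on lists of words, giving the word error rate from which word-level accuracy is typically computed. Because insertions count as errors, the error rate can exceed 100% for very poor output.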

From phonemes to words

Once we have the deciphered phoneme sequences, we need to convert them into words and sentences. This is challenging, especially if the deciphered phoneme sequence isn’t perfectly accurate. To solve this puzzle, we use two complementary types of machine learning language models.

How the UC Davis speech brain-computer interface deciphers neural activity and turns it into words. Credit: UC Davis Health

The first is n-gram language models, which predict which word is most likely to follow the previous n-1 words. We trained a 5-gram, or five-word, language model on millions of sentences to predict the likelihood of a word based on the previous four words, capturing local context and common phrases. For example, after “I am very good,” it might suggest “today” as more likely than “potato.”
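A toy version of the n-gram idea: count how often each five-word sequence appears in a handful of training sentences, and use add-alpha smoothing so unseen words like “potato” still get a small, nonzero probability. The class name, the smoothing scheme and the three training sentences are assumptions for illustration; a model trained on millions of sentences would typically use a more sophisticated smoothing method such as Kneser-Ney.

```python
from collections import Counter
from typing import Iterable, List


class NGramModel:
    """Count-based n-gram language model with add-alpha smoothing (toy example)."""

    def __init__(self, n: int = 5, alpha: float = 0.1) -> None:
        if n < 2:
            raise ValueError("n must be at least 2")
        self.n = n
        self.alpha = alpha
        self.ngram_counts: Counter = Counter()    # (w1, ..., wn) -> count
        self.context_counts: Counter = Counter()  # (w1, ..., w(n-1)) -> count
        self.vocab: set = set()

    def train(self, sentences: Iterable[List[str]]) -> None:
        for words in sentences:
            # Pad with start symbols so early words also get a full (n-1)-word context.
            padded = ["<s>"] * (self.n - 1) + words + ["</s>"]
            self.vocab.update(padded)
            for i in range(self.n - 1, len(padded)):
                context = tuple(padded[i - self.n + 1 : i])
                self.ngram_counts[context + (padded[i],)] += 1
                self.context_counts[context] += 1

    def prob(self, context: List[str], word: str) -> float:
        """Smoothed P(word | last n-1 words of context)."""
        padded = ["<s>"] * (self.n - 1) + context
        ctx = tuple(padded[-(self.n - 1):])
        types = len(self.vocab) + 1  # +1 reserves probability mass for one unknown-word type
        return (self.ngram_counts[ctx + (word,)] + self.alpha) / (
            self.context_counts[ctx] + self.alpha * types
        )


lm = NGramModel(n=5)
lm.train(s.split() for s in [
    "i am very good today",
    "i am very good thanks",
    "you are very good today",
])
context = "i am very good".split()
print(lm.prob(context, "today") > lm.prob(context, "potato"))  # True
```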

Using this model, we convert our phoneme sequences into the 100 most likely word sequences, each with an associated probability.
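A simplified sketch of producing a ranked list of candidate sentences: beam search that adds per-word neural scores to language-model scores and keeps only the top `beam_size` hypotheses (100 by default, matching the article’s candidate count). It assumes, hypothetically, that word boundaries are already known and that each word slot comes with a few candidate words and log-probabilities; real decoders search over word boundaries as well, commonly using weighted finite-state transducers. `n_best`, `toy_lm` and all scores are made up for illustration.

```python
import heapq
import math
from typing import Callable, List, Sequence, Tuple

Hypothesis = Tuple[float, Tuple[str, ...]]  # (total log-probability, words so far)


def n_best(
    word_options: Sequence[Sequence[Tuple[str, float]]],
    lm_log_prob: Callable[[Tuple[str, ...], str], float],
    beam_size: int = 100,
    lm_weight: float = 1.0,
) -> List[Hypothesis]:
    """Beam search over per-slot word candidates.

    word_options[k] lists (word, neural_log_prob) alternatives for the k-th word.
    Each hypothesis is scored as neural log-prob + lm_weight * LM log-prob, and
    only the `beam_size` best survive each step (returned best first).
    """
    beam: List[Hypothesis] = [(0.0, ())]
    for options in word_options:
        expanded = [
            (score + neural_lp + lm_weight * lm_log_prob(words, word), words + (word,))
            for score, words in beam
            for word, neural_lp in options
        ]
        beam = heapq.nlargest(beam_size, expanded)
    return beam


# Hypothetical bigram scores standing in for a real n-gram model.
BIGRAM_LOG_PROB = {("good", "today"): math.log(0.3), ("good", "potato"): math.log(0.001)}


def toy_lm(history: Tuple[str, ...], word: str) -> float:
    prev = history[-1] if history else "<s>"
    return BIGRAM_LOG_PROB.get((prev, word), math.log(0.01))


options = [[("good", -0.1)], [("potato", -0.9), ("today", -1.2)]]
print(n_best(options, toy_lm)[0][1])  # ('good', 'today')
```

Here the neural scores slightly favor “potato,” but the language model’s preference for “good today” outweighs them. Working in log-probabilities turns products of probabilities into sums and avoids numerical underflow on long sentences.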

The second is large language models, which power AI chatbots and also predict which words most likely follow others. We use large language models to refine our choices. These models, trained on vast amounts of diverse text, have a broader understanding of language structure and meaning. They help us determine which of our 100 candidate sentences makes the most sense in a wider context.

By carefully balancing probabilities from the n-gram model, the large language model and our initial phoneme predictions, we can make a highly educated guess about what the brain-computer interface user is trying to say. This multistep process allows us to handle the uncertainties in phoneme decoding and produce coherent, contextually appropriate sentences.
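The balancing step can be sketched as a weighted sum of log-probabilities (log-linear interpolation) over the candidate list. The article does not give its exact formula or weights, so the weights below are hypothetical, and `llm_log_prob` is a stand-in: in a real system it would sum a large language model’s token log-probabilities for the sentence, while here a lookup table plays that role so the example runs without a model.

```python
from typing import Callable, Dict, List, Tuple

# (sentence, log-prob from phoneme decoding, log-prob from the n-gram model)
Candidate = Tuple[str, float, float]


def rescore(
    candidates: List[Candidate],
    llm_log_prob: Callable[[str], float],
    weights: Dict[str, float],
) -> str:
    """Return the candidate sentence with the highest weighted combined score."""

    def combined(candidate: Candidate) -> float:
        sentence, phoneme_lp, ngram_lp = candidate
        return (
            weights["phoneme"] * phoneme_lp
            + weights["ngram"] * ngram_lp
            + weights["llm"] * llm_log_prob(sentence)
        )

    return max(candidates, key=combined)[0]


# Hypothetical stand-in for a large language model's sentence log-probability.
FAKE_LLM_SCORES = {"i am very good today": -12.0, "i am very good to day": -20.0}

candidates = [
    ("i am very good to day", -5.0, -9.0),
    ("i am very good today", -5.5, -9.5),
]
weights = {"phoneme": 1.0, "ngram": 0.5, "llm": 0.5}
print(rescore(candidates, FAKE_LLM_SCORES.__getitem__, weights))  # i am very good today
```

With `weights["llm"]` set to 0, the same candidates yield “i am very good to day,” so in this toy case it is the large language model’s wider context that tips the choice. Weights like these are usually tuned on held-out data.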

Real-world benefits

In practice, this speech decoding strategy has been remarkably successful. We’ve enabled Casey Harrell, a man with ALS, to “speak” with over 97% accuracy using just his thoughts. This breakthrough allows him to easily converse with his family and friends for the first time in years, all in the comfort of his own home.

Speech brain-computer interfaces represent a significant step forward in restoring communication. As we continue to refine these devices, they hold the promise of giving a voice to those who have lost the ability to speak, reconnecting them with their loved ones and the world around them.

However, challenges remain, such as making the technology more accessible, portable and durable over years of use. Despite these hurdles, speech brain-computer interfaces are a powerful example of how science and technology can come together to solve complex problems and dramatically improve people’s lives.

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Quotation:

From thoughts to words: How AI deciphers neural signals to help a man with ALS speak (2024, August 24)

retrieved 25 August 2024

from https://medicalxpress.com/information/2024-08-solutions-words-ai-deciphers-neural.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.